How to Quantify Your RAG Chatbot's Quality with DeepEval - Andy Primawan

You've built a powerful RAG chatbot, but how do you prove it's any good? Move beyond subjective checks and learn how to implement an automated, quantitative evaluation pipeline using DeepEval and Pytest. This guide shows you how, no matter what language your chatbot is written in.

Introduction

So you’ve done it. After weeks of work, your Retrieval-Augmented Generation (RAG) chatbot is up and running. It's connected to a vector database, it pulls relevant context, and it generates impressive-sounding answers to user queries. You push it to a staging environment, run a few manual tests, and it seems to work.

But then, the questions start creeping in. When you change the prompt, does the answer quality improve or decline? If you tweak the chunking strategy, how does that really affect the context retrieval? Is the answer "good" just because it sounds plausible, or is it factually grounded in the documents you provided?

Manual spot-checking feels subjective and isn't scalable. You need numbers. You need a quality gate.

The Problem: The Ambiguity of "Good"

As full-stack engineers, we're used to a world of deterministic tests. If 2 + 2 returns 4, the test passes. If an API endpoint returns a 200 OK with the correct JSON schema, it's green.

Evaluating a RAG application is different. Quality is a messy, multi-faceted concept, usually broken down into several metrics (all of which DeepEval implements):

- Answer Relevancy: Measures how relevant the model’s actual output is to the given input, with an LLM-provided explanation for the score.

- Contextual Precision: Evaluates whether relevant context nodes are ranked higher than irrelevant ones in retrieval, with an LLM-generated rationale.

- Faithfulness: Checks if the model’s output factually aligns with the provided retrieval context, accompanied by an LLM rationale.

- Contextual Recall: Measures how well the retrieved context covers what’s needed for the expected output, with an explanatory justification.

- Contextual Relevancy: Assesses the overall relevance of the retrieved context to the input, with an LLM-generated explanation.
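These metrics differ in what they need as input. Some only compare the answer with the query, while others also need the retrieved chunks or a reference answer. The mapping below records the required `LLMTestCase` fields for these five metrics as listed in DeepEval's documentation at the time of writing (verify them against your installed version). `REQUIRED_FIELDS`, `missing_fields`, and `runnable_metrics` are hypothetical helpers for illustration, not part of DeepEval.

```python
# Required LLMTestCase fields per DeepEval metric, as listed in DeepEval's docs
# at the time of writing. Assumption: verify against your installed version.
REQUIRED_FIELDS = {
    "AnswerRelevancyMetric": {"input", "actual_output"},
    "FaithfulnessMetric": {"input", "actual_output", "retrieval_context"},
    "ContextualRelevancyMetric": {"input", "actual_output", "retrieval_context"},
    "ContextualPrecisionMetric": {"input", "actual_output", "expected_output", "retrieval_context"},
    "ContextualRecallMetric": {"input", "actual_output", "expected_output", "retrieval_context"},
}


def missing_fields(metric_name: str, test_case: dict) -> set:
    """Return the required fields that are absent or empty in `test_case` (hypothetical helper)."""
    return {field for field in REQUIRED_FIELDS[metric_name] if not test_case.get(field)}


def runnable_metrics(test_case: dict) -> list:
    """Return, sorted by name, the metrics whose required fields are all present."""
    return sorted(name for name in REQUIRED_FIELDS if not missing_fields(name, test_case))
```

For example, a test case without a reference answer can only be scored with AnswerRelevancy, Faithfulness, and ContextualRelevancy, which is one reason to build the golden dataset described in Step 2.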

Without a way to measure these factors, you're flying blind. Every "improvement" is a guess, and you run the constant risk of introducing regressions that degrade the user experience in subtle ways. How can we build a repeatable, objective, and automated quality gate for our RAG application?

The Solution: A Decoupled Evaluation Pipeline

The answer is to treat evaluation as a separate service that interacts with your RAG application over a simple API contract. Because the evaluator only sees HTTP requests and responses, your main application can stay in whatever language you built it in, whether that's Go, Node.js, Rust, or Java.

Our evaluation suite will be written in Python using two powerful tools: pytest for the test framework and DeepEval for the AI evaluation metrics.

Here’s the step-by-step blueprint.

Step 1: Expose Two Simple REST APIs

The only change you need to make to your existing application is to expose two endpoints:

- A Context Retrieval Endpoint: Given a user query, this API should return the list of context chunks your retriever pulls from the vector database.

- A Final Response Endpoint: Given a user query, this API should return the final, generated answer from the RAG pipeline.

For example, your API contract might look like this:

Endpoint 1: Context Retrieval, `POST /retrieve-context`

Request body:

```json
{"query": "What is the warranty period?"}
```

Success response (200 OK):

```json
{
  "contexts": [
    "Chunk 1: The standard warranty period for all electronic products is 12 months from the date of purchase...",
    "Chunk 2: Extended warranties can be purchased separately for an additional 24 months...",
    "Chunk 3: Our return policy states that items can be returned within 30 days..."
  ]
}
```

Endpoint 2: RAG Response, `POST /generate-response`

Request body:

```json
{"query": "What is the warranty period?"}
```

Success response (200 OK):

```json
{
  "answer": "The standard warranty period for electronic products is 12 months from the date of purchase. You also have the option to buy an extended warranty for an additional 24 months."
}
```
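Because the evaluator depends only on this contract, you can dry-run it before the real service is ready by pointing it at a stub. The sketch below uses only Python's standard library and serves the example payloads shown above for any well-formed query. `StubRagHandler`, `make_stub_server`, and the canned data are illustrative, not part of the original project.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Canned payloads copied from the example contract above.
CANNED_RESPONSES = {
    "/retrieve-context": {
        "contexts": [
            "Chunk 1: The standard warranty period for all electronic products is 12 months from the date of purchase...",
            "Chunk 2: Extended warranties can be purchased separately for an additional 24 months...",
            "Chunk 3: Our return policy states that items can be returned within 30 days...",
        ]
    },
    "/generate-response": {
        "answer": (
            "The standard warranty period for electronic products is 12 months from the date of purchase. "
            "You also have the option to buy an extended warranty for an additional 24 months."
        )
    },
}


class StubRagHandler(BaseHTTPRequestHandler):
    """Answers POST /retrieve-context and POST /generate-response with canned JSON."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length") or 0)
        try:
            body = json.loads(self.rfile.read(length) or b"{}")
        except ValueError:  # invalid JSON or invalid UTF-8
            body = None
        if self.path not in CANNED_RESPONSES:
            self._send_json(404, {"error": "unknown endpoint"})
        elif not isinstance(body, dict) or not isinstance(body.get("query"), str):
            self._send_json(400, {"error": 'expected a body like {"query": "..."}'})
        else:
            self._send_json(200, CANNED_RESPONSES[self.path])

    def _send_json(self, status: int, payload: dict) -> None:
        data = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def log_message(self, format, *args):
        pass  # silence per-request logging


def make_stub_server(port: int = 0) -> HTTPServer:
    """Bind (but don't start) the stub on localhost; port 0 lets the OS pick a free port."""
    return HTTPServer(("127.0.0.1", port), StubRagHandler)
```

For local use, `make_stub_server(8000).serve_forever()` serves both endpoints on port 8000 (an arbitrary choice). Swap in the real service once it exposes the same two routes.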

Step 2: Build Your Golden Dataset

A "golden dataset" is your source of truth. It's a collection of test cases, each containing a query and the ideal, "perfect" response you expect the system to provide. This is the most important (and often most time-consuming) part, but it's an investment that pays huge dividends.

Create a simple YAML file. In this example it's called dojotek-ai-chatbot-rag-deepeval-dataset.yaml:

```yaml
# RAG evaluation samples
samples:
  - query: "Can I get a yellow fever vaccination at Selamat Hospital before traveling abroad?"
    reference: "Yes, you can get a yellow fever vaccination at Selamat Hospital before traveling abroad."
  - query: "What preparations are needed before undergoing an endoscopy at Selamat Hospital?"
    reference: "Before undergoing an endoscopy at Selamat Hospital, you need to fast for 8-12 hours, have a consultation, undergo a full blood test, and provide medical consent."
```
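A malformed sample (a missing `reference`, a mistyped key, a duplicated query) otherwise surfaces much later as a confusing metric failure. A small loader that fails fast keeps the dataset honest. This sketch assumes the `samples`/`query`/`reference` layout above and PyYAML for parsing; `validate_samples` and `load_samples` are illustrative names.

```python
from pathlib import Path


def validate_samples(data) -> list:
    """Check parsed YAML has the samples/query/reference shape; return the samples."""
    if not isinstance(data, dict) or not isinstance(data.get("samples"), list):
        raise ValueError("dataset must be a mapping with a 'samples' list")
    samples = data["samples"]
    if not samples:
        raise ValueError("dataset has no samples")
    seen_queries = set()
    for i, sample in enumerate(samples):
        if not isinstance(sample, dict):
            raise ValueError(f"sample {i} is not a mapping")
        for key in ("query", "reference"):
            value = sample.get(key)
            if not isinstance(value, str) or not value.strip():
                raise ValueError(f"sample {i} is missing a non-empty '{key}'")
        if sample["query"] in seen_queries:
            raise ValueError(f"sample {i} duplicates query {sample['query']!r}")
        seen_queries.add(sample["query"])
    return samples


def load_samples(path) -> list:
    """Parse the YAML dataset file and validate it (requires PyYAML: pip install pyyaml)."""
    import yaml  # imported here so validate_samples works without PyYAML installed

    with Path(path).open(encoding="utf-8") as f:
        return validate_samples(yaml.safe_load(f))
```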

If you'd like the sample knowledge base, here is the DOCX file I fed to my Dojotek AI Chatbot's RAG pipeline:

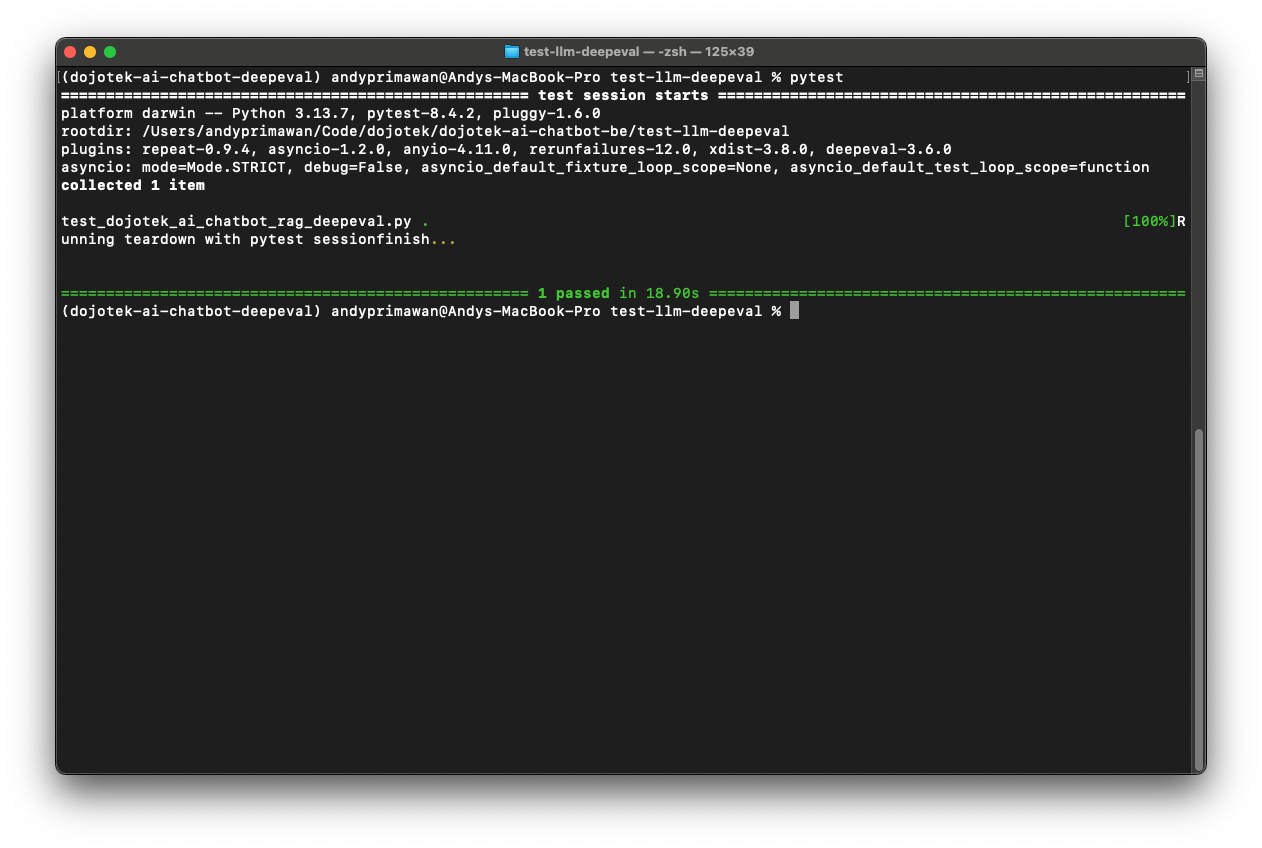

Step 3: Write the Evaluation Suite with Pytest and DeepEval

Now for the fun part. We'll set up a Python project for our evaluation logic.

First, install the necessary libraries:

```bash
pip install -r requirements.txt

# You may need to set an API key for the LLM that DeepEval uses for evaluation
# export OPENAI_API_KEY="sk-..."
```

Next, create your test file, for example test-llm-deepeval/test_dojotek_ai_chatbot_rag_deepeval.py. This script will:

- Load `dojotek-ai-chatbot-rag-deepeval-dataset.yaml`.
- Create one test for each entry in the dataset.
- For each query, call your two application endpoints (`/retrieve-context` and `/generate-response`).
- Use DeepEval's `LLMTestCase` to package the query, the generated answer, the reference answer, and the retrieved contexts (as its `input`, `actual_output`, `expected_output`, and `retrieval_context` fields).
- Assert the test case against DeepEval metrics such as `FaithfulnessMetric` and `AnswerRelevancyMetric`, each with a minimum passing threshold (e.g., 0.8 out of 1.0).

You can check the working Pytest DeepEval code in this GitHub repo:

https://github.com/dojotek/dojotek-ai-chatbot-backend/blob/main/test-llm-deepeval/test_dojotek_ai_chatbot_rag_deepeval.py

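Note: DeepEval uses an LLM (such as GPT-4) as a judge, scoring your application's output against the query, the retrieved context, or the reference answer, depending on the metric. This is powerful, but every metric on every sample triggers one or more LLM calls, so API costs grow with the number of samples times the number of metrics.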

Step 4: Integrate into Your CI Pipeline

The final step is to make this evaluation an automated quality gate. The approach is tool-agnostic:

- Run your main RAG chatbot service with its own RAG pipeline, vector database, and indexed document chunks (via Docker Compose or your platform’s runtime).

- Run the Pytest DeepEval suite as a separate evaluator that calls your service’s API endpoints and computes metrics.

This pattern works in GitHub Actions, GitLab CI, Jenkins, or any orchestrator. A generic workflow looks like:

- Provision runtime dependencies for the RAG service (database, vector store, Redis).

- Launch the RAG service and wait until its health endpoint is ready.

- Set up Python and install the evaluator dependencies.

- Execute the Pytest DeepEval tests, which call `/retrieve-context` and `/generate-response` and enforce the metric thresholds.
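The "wait until its health endpoint is ready" step is easy to get wrong with a fixed `sleep`. A small stdlib-only polling helper like the one below can run in CI just before pytest. The `wait_until_healthy` name, the timeouts, and the existence of a health URL are assumptions; use whatever readiness endpoint your service actually exposes.

```python
import http.client
import time
import urllib.request


def wait_until_healthy(url: str, timeout_s: float = 120.0, interval_s: float = 2.0) -> bool:
    """Poll `url` with GET until it answers 2xx or `timeout_s` elapses.

    Returns True once the service is healthy, False if the deadline passes first.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            with urllib.request.urlopen(url, timeout=5) as response:
                if 200 <= response.status < 300:
                    return True
        except (OSError, http.client.HTTPException):
            # Connection refused, timeouts, and 4xx/5xx responses (urllib's
            # HTTPError is an OSError subclass) all mean "not ready yet".
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval_s)
```

In a CI script you might call `sys.exit(0 if wait_until_healthy("http://localhost:8000/health") else 1)` (hypothetical URL) right before invoking pytest, so a service that never comes up fails fast instead of producing a wall of connection errors.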

Now every change triggers the evaluator. If any metric falls below its threshold, the corresponding test fails, pytest exits with a non-zero status, and the CI job fails, preventing the regression from shipping.

Conclusion

By decoupling your evaluation logic from your main application and leveraging tools like DeepEval and pytest, you can transform RAG quality assurance. You move from subjective, manual checks to an automated, quantitative system that provides clear, actionable feedback.

This framework gives you the confidence to iterate quickly. You can experiment with new models, prompts, and retrieval strategies, all while having a safety net that ensures you're not just changing your RAG application—you're verifiably improving it.

Link to Example Code: You can find a complete, runnable example of this setup on GitHub: https://github.com/dojotek/dojotek-ai-chatbot-backend.

Specifically, look in the test-llm-deepeval folder.