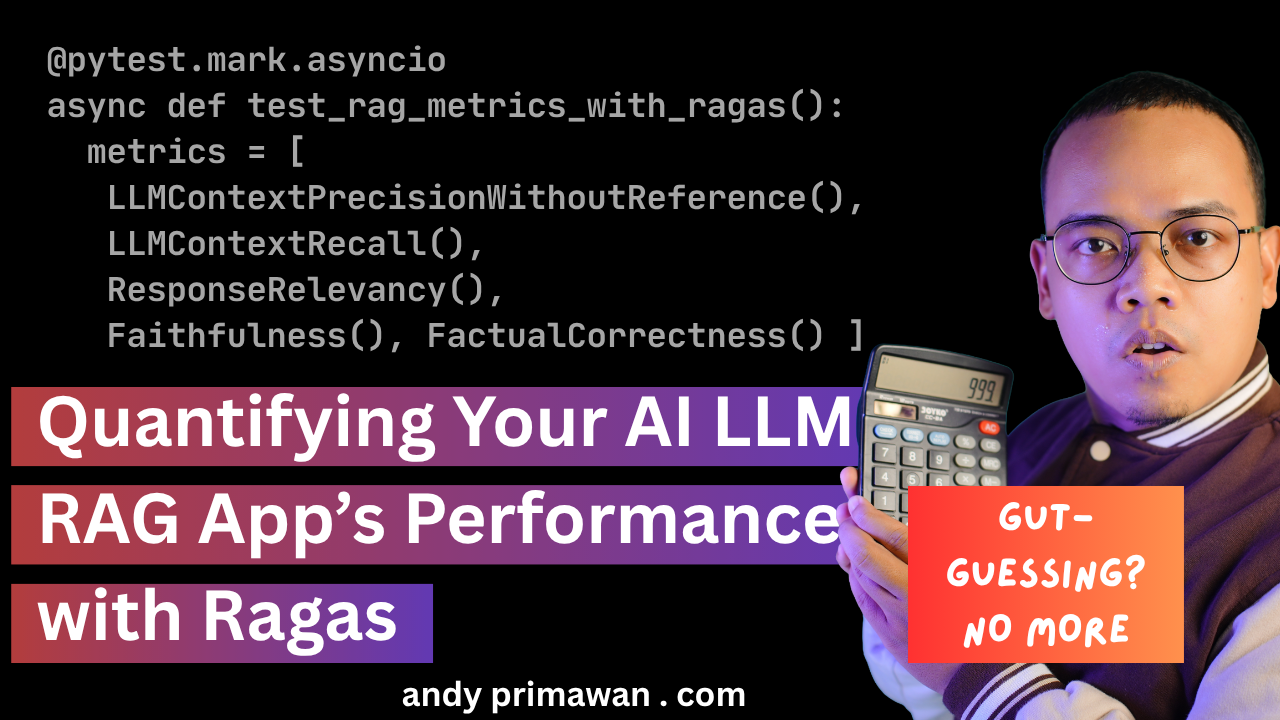

Quantifying Your AI LLM RAG App's Performance with Ragas - Andy Primawan

You've built a slick RAG application, but how do you prove it's any good? Stop the manual gut-checks. This guide shows how to use ragas to generate quantitative metrics, integrate with any language via simple REST APIs, and build an automated CI quality gate for your LLM app.

Introduction

You've been in the zone. The terminal is buzzing, and you're chaining together LangChain, LangGraph, or maybe directly using the OpenAI/Anthropic SDKs. After a flurry of coding, your RAG (Retrieval-Augmented Generation) chatbot is alive! It's retrieving documents from your vector store, the LLM is synthesizing answers, and everything feels like it's working. You and your team start throwing questions at it, and the responses look pretty good. The vibe is high.

But then, the hard questions begin, not for the chatbot, but for you.

The Problem: From "It Works" to "Is It Good?"

That initial excitement quickly meets a harsh reality. Manual testing and "gut-feeling" evaluations just don't scale.

- How do you measure quality objectively? Is a "pretty good" answer a 7/10 or a 9/10? Your opinion might differ from your product manager's or your user's.

- How do you detect regressions? You just tweaked a prompt or changed the chunking strategy. Did performance improve, or did you silently break five other use cases?

- How do you compare different models or prompts? Is gpt-4o really better than claude-4-sonnet for your specific data? How much better? Is it worth the cost difference?

Without concrete, numeric metrics, you're flying blind. You can't confidently iterate, you can't prove improvement, and you can't establish a quality gate to prevent shipping a degraded experience.

The Solution: Automatic, Quantitative Evaluation with Ragas

Enter ragas, an open-source Python framework designed specifically for evaluating RAG pipelines. It provides a set of quantitative metrics that measure the performance of both the retrieval and the generation components of your system.

The best part? You don't need to rewrite your application. Even if your RAG app is written in Node.js, Go, or Rust, you can use ragas as long as you can expose two simple REST endpoints.

Here are the core ragas metrics that give you a 360-degree view of your application's performance:

- Context Precision: Evaluates whether the most relevant chunks appear at the top of the retrieved context ranking for a query.

- Context Recall: Measures how much relevant information was retrieved (how little was missed), using a reference for comparison.

- Response Relevancy: Rates how well the response addresses the user’s input, penalizing incomplete or redundant content.

- Faithfulness: Scores how factually consistent the response is with the retrieved context; higher means fewer hallucinations.

- Factual Correctness: Compares the response to a reference for factual accuracy by aligning claims and computing precision/recall/F1.
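To make two of these definitions concrete, here is a minimal sketch of the arithmetic behind a rank-aware context precision score and a claim-level precision/recall/F1. It is an illustration only, not ragas's implementation: ragas uses an LLM to decide which chunks are relevant and which claims are supported, while this sketch assumes those judgments are already available. The function names `context_precision_at_k` and `precision_recall_f1` are hypothetical.

```python
from typing import Sequence


def context_precision_at_k(relevance: Sequence[bool]) -> float:
    """Rank-aware precision over retrieved chunks.

    relevance[i] says whether the chunk at rank i+1 was judged relevant.
    Relevant chunks near the top of the ranking raise the score.
    """
    hits = 0
    weighted = 0.0
    for rank, is_relevant in enumerate(relevance, start=1):
        if is_relevant:
            hits += 1
            weighted += hits / rank  # precision@rank, counted only at relevant ranks
    return weighted / hits if hits else 0.0


def precision_recall_f1(tp: int, fp: int, fn: int) -> tuple[float, float, float]:
    """Claim-level scores: tp = supported claims, fp = unsupported, fn = missed."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1
```

With the same two relevant chunks, `[True, False, True]` scores higher than `[False, True, True]` because the relevant chunks sit closer to the top of the ranking.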

Step 1: Expose Two Simple Endpoints

In your existing RAG application (whatever language it's in), create two API endpoints:

GET /run-chatbot-rag-inference?query=<user_query>: This endpoint should take a user query, run your full RAG pipeline (retrieval and generation), and return the final chatbot response.

// Response from GET /run-chatbot-rag-inference?query=what is ragas
{
  "answer": "Ragas is an open-source framework designed to help you evaluate your Retrieval-Augmented Generation (RAG) pipelines by measuring metrics like faithfulness and answer relevancy."
}

GET /find-relevant-knowledge-chunks?query=<user_query>: This endpoint should take a user query, perform the vector search, and return a JSON object whose relevant_knowledge_chunks field is an array of the retrieved context strings.

// Response from GET /find-relevant-knowledge-chunks?query=what is ragas
{
  "relevant_knowledge_chunks": [
    "Ragas is a framework that helps you evaluate your RAG pipelines...",
    "Evaluating RAG pipelines is crucial for production...",
    "Another tool for evaluation is TruLens..."
  ]
}
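On the evaluation side, calling the two endpoints above takes only a few lines. The following is a minimal sketch using only the Python standard library. The base URL `http://localhost:3000` and the helper names (`build_url`, `get_json`, `ask_chatbot`, `find_chunks`) are assumptions for illustration; the paths and JSON field names come from the examples above.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

BASE_URL = "http://localhost:3000"  # assumption: wherever your RAG app listens


def build_url(base_url: str, path: str, query: str) -> str:
    """Build an endpoint URL with the user query safely URL-encoded."""
    return f"{base_url.rstrip('/')}{path}?{urlencode({'query': query})}"


def get_json(url: str, timeout: float = 60.0) -> dict:
    """GET a URL and parse the JSON response body."""
    with urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def ask_chatbot(query: str, base_url: str = BASE_URL) -> str:
    """Run the full RAG pipeline and return the final answer text."""
    url = build_url(base_url, "/run-chatbot-rag-inference", query)
    return get_json(url)["answer"]


def find_chunks(query: str, base_url: str = BASE_URL) -> list[str]:
    """Run retrieval only and return the retrieved context strings."""
    url = build_url(base_url, "/find-relevant-knowledge-chunks", query)
    return get_json(url)["relevant_knowledge_chunks"]
```

The generous timeout is deliberate: a full retrieval-plus-generation round trip can take many seconds, and a short timeout would turn slow answers into spurious test failures.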

Step 2: Build Your "Golden Dataset"

This is arguably the most crucial step. You need a set of high-quality questions and their corresponding "ground truth" answers, which ragas will use as the benchmark. For example, create a simple YAML file named dojotek-ai-chatbot-rag-ragas-dataset.yaml.

dojotek-ai-chatbot-rag-ragas-dataset.yaml:

# RAG evaluation samples
samples:
  - query: "Does Selamat Hospital provide a sleep study for patients with sleep apnea?"
    reference: "Yes, Selamat Hospital provides a sleep study for patients with sleep apnea."
  - query: "What eye surgeries are available at Selamat Hospital for glaucoma and cataracts?"
    reference: "Selamat Hospital offers cataract surgery using the latest phacoemulsification technology and various surgical techniques for glaucoma to manage high eye pressure."
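A dataset like this is easy to break by hand (a missing reference, an empty query), so it can help to validate it at load time. Here is a minimal sketch, assuming the `samples` / `query` / `reference` layout shown above and assuming PyYAML is installed; the function names `validate_samples` and `load_dataset` are hypothetical.

```python
from pathlib import Path


def validate_samples(data: dict) -> list[dict]:
    """Check the parsed YAML structure and return cleaned samples."""
    samples = data.get("samples") if isinstance(data, dict) else None
    if not isinstance(samples, list) or not samples:
        raise ValueError("dataset must contain a non-empty 'samples' list")
    cleaned = []
    for i, sample in enumerate(samples):
        for key in ("query", "reference"):
            value = sample.get(key) if isinstance(sample, dict) else None
            if not isinstance(value, str) or not value.strip():
                raise ValueError(f"sample {i} is missing a non-empty '{key}'")
        cleaned.append(
            {"query": sample["query"].strip(), "reference": sample["reference"].strip()}
        )
    return cleaned


def load_dataset(path: str) -> list[dict]:
    """Read the YAML file from disk and validate it."""
    import yaml  # PyYAML; assumed to be listed in your requirements

    return validate_samples(yaml.safe_load(Path(path).read_text(encoding="utf-8")))
```

Failing fast here keeps a malformed golden dataset from showing up later as a confusing metric failure.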

By the way, in case you need the sample data, here is the knowledge file I fed to my Dojotek AI Chatbot LLM RAG:

Step 3: Wire It Up with pytest and ragas

Now, we create a separate Python project for our evaluation logic. This is where ragas lives. We'll wrap our evaluation in a pytest test, allowing us to set clear pass/fail thresholds.

First, install the necessary libraries:

pip install -r requirements.txt

Next, create your test file, for example test_dojotek_ai_chatbot_rag_ragas.py.

See the working Ragas pytest evaluation file:

test_dojotek_ai_chatbot_rag_ragas.py

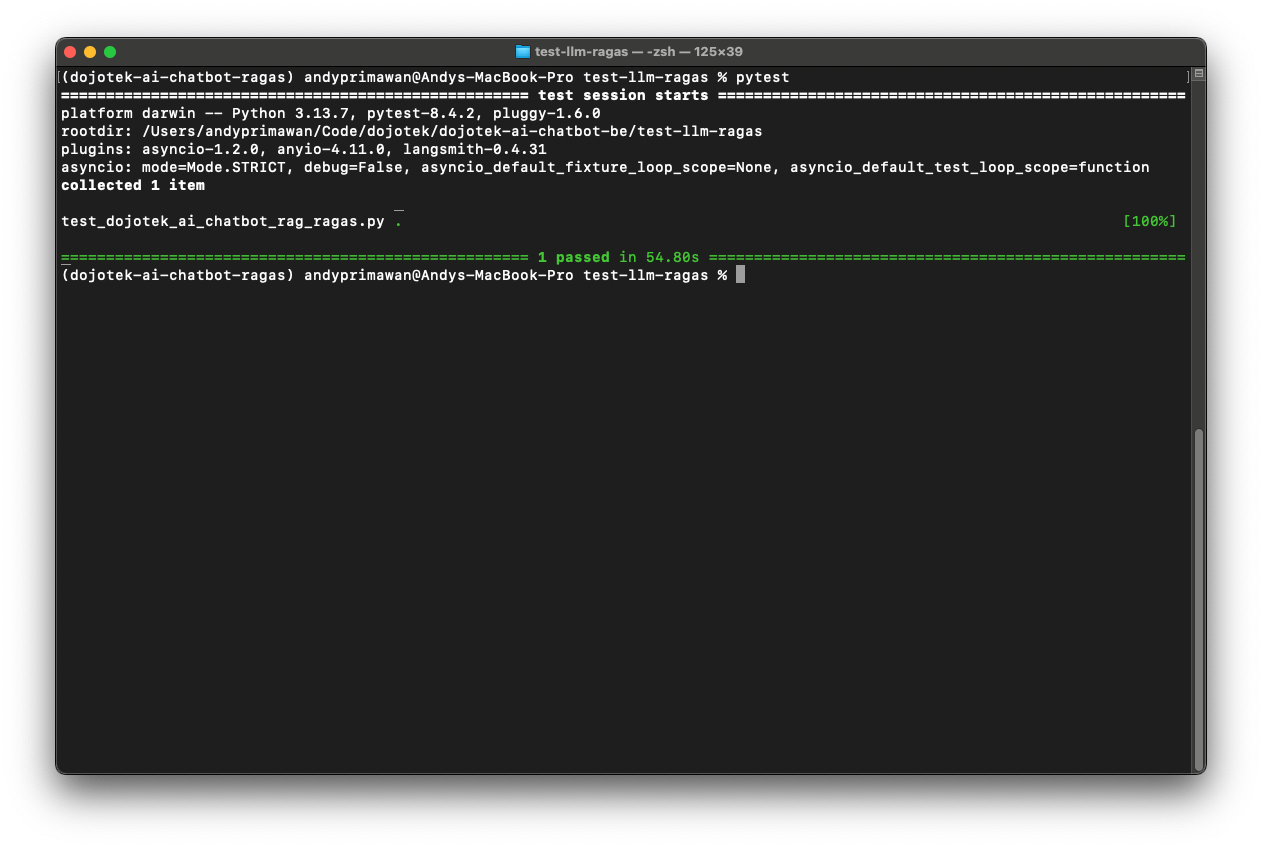
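For orientation, the core of such a test can be sketched as follows. This is not the linked file, only a rough outline under stated assumptions: the ragas imports follow the 0.2-style API (`EvaluationDataset.from_list`, `evaluate`, `Faithfulness`, `LLMContextRecall`) and should be checked against your installed version; ragas falls back to its default evaluator LLM, which needs credentials in the environment; the score column names `faithfulness` and `context_recall` are what I would expect but may differ by version; and the threshold values and helper names (`build_rows`, `failing_metrics`, `run_ragas`, `assert_quality`) are hypothetical.

```python
THRESHOLDS = {"faithfulness": 0.8, "context_recall": 0.7}  # hypothetical minimums


def build_rows(samples, ask, retrieve):
    """Call the RAG app for each golden sample and build ragas-style rows."""
    rows = []
    for sample in samples:
        rows.append(
            {
                "user_input": sample["query"],
                "retrieved_contexts": retrieve(sample["query"]),
                "response": ask(sample["query"]),
                "reference": sample["reference"],
            }
        )
    return rows


def failing_metrics(scores, thresholds):
    """Return the metrics whose mean score is below its threshold."""
    failures = {}
    for name, minimum in thresholds.items():
        score = scores.get(name, float("nan"))
        if not score >= minimum:  # a missing or NaN score also counts as a failure
            failures[name] = score
    return failures


def run_ragas(rows):
    """Score the rows with ragas and return the mean of each metric column."""
    # Assumption: ragas 0.2-style API; verify against your installed version.
    from ragas import EvaluationDataset, evaluate
    from ragas.metrics import Faithfulness, LLMContextRecall

    result = evaluate(
        dataset=EvaluationDataset.from_list(rows),
        metrics=[Faithfulness(), LLMContextRecall()],
    )
    return result.to_pandas().mean(numeric_only=True).to_dict()


def assert_quality(rows, score_rows=run_ragas, thresholds=THRESHOLDS):
    """The quality gate: fail when any metric falls below its threshold."""
    failures = failing_metrics(score_rows(rows), thresholds)
    assert not failures, f"metrics below threshold: {failures}"
```

A pytest test function would then load the golden dataset, call `build_rows` with functions that hit your two endpoints, and finish with `assert_quality(rows)`. Keeping the scoring step injectable (`score_rows`) lets you unit-test the gate logic without paying for LLM calls.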

Step 4: Run as a CI Quality Gate

The final piece of the puzzle is automation. You can now run this pytest suite in your CI/CD pipeline (e.g., GitHub Actions, GitLab CI, Jenkins).

A typical workflow in your CI YAML file would be:

- Start your RAG application service.

- Run the evaluation script: pytest.

- The test will pass only if all metric scores are above your defined thresholds.

If a developer pushes a change that causes hallucinations to increase (lowering the faithfulness score), the CI pipeline will fail, blocking the change from being merged. Your quality gate is now active!
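One practical detail in that workflow is that the RAG service may need a few seconds to boot before pytest can reach it. A small runner script can wait for the service and then hand off to pytest. This is a sketch only: the URL `http://localhost:3000/`, the timeout values, and the names `is_up`, `wait_until`, and `main` are assumptions, not part of the original setup.

```python
import subprocess
import sys
import time
from urllib.error import HTTPError, URLError
from urllib.request import urlopen


def is_up(url: str) -> bool:
    """Return True once the server answers at all (even with a 4xx status)."""
    try:
        with urlopen(url, timeout=5):
            return True
    except HTTPError as err:
        return err.code < 500  # the server responded, so it is listening
    except (URLError, OSError):
        return False  # connection refused, DNS failure, timeout, ...


def wait_until(probe, timeout=60.0, interval=2.0, sleep=time.sleep, clock=time.monotonic) -> bool:
    """Poll probe() until it returns True or the timeout elapses."""
    deadline = clock() + timeout
    while True:
        if probe():
            return True
        if clock() >= deadline:
            return False
        sleep(interval)


def main(service_url: str = "http://localhost:3000/") -> int:
    """Wait for the RAG service, then run pytest and return its exit code."""
    if not wait_until(lambda: is_up(service_url)):
        print("RAG service did not come up in time", file=sys.stderr)
        return 1
    return subprocess.run([sys.executable, "-m", "pytest"]).returncode
```

In a CI step you would call `sys.exit(main())` from a small entry script, so that a non-zero pytest exit code fails the job and blocks the merge.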

Conclusion

Moving from subjective, "gut-feel" testing to an automated, quantitative evaluation framework is a sign of a mature MLOps practice. By decoupling your evaluation logic from your core application using a simple API contract, you gain immense flexibility.

With ragas and pytest, you can now:

- Objectively measure your RAG application's quality.

- Track performance over time and confidently know if changes are improvements or regressions.

- Establish an automated quality gate in your CI/CD pipeline to protect your users from degraded performance.

Stop guessing and start measuring. Building a robust LLM application is not just about making it work; it's about proving it works well, every single time.

Example Code

You can find a complete, runnable example of this setup on GitHub:

- The pytest and Ragas Python code: test_dojotek_ai_chatbot_rag_ragas.py

- The sample golden dataset: dojotek-ai-chatbot-rag-ragas-dataset.yaml

- The pip dependency list: requirements.txt